Introduction

Inter-rater reliability analysis measures agreement between multiple raters who evaluate the same subjects or items. Traditional methods include Cohen's Kappa for two raters and Fleiss' Kappa, Gwet's AC2, or Krippendorff's Alpha for multiple raters. While Excel offers basic statistical tools for these calculations, it requires manual data manipulation and formula creation.

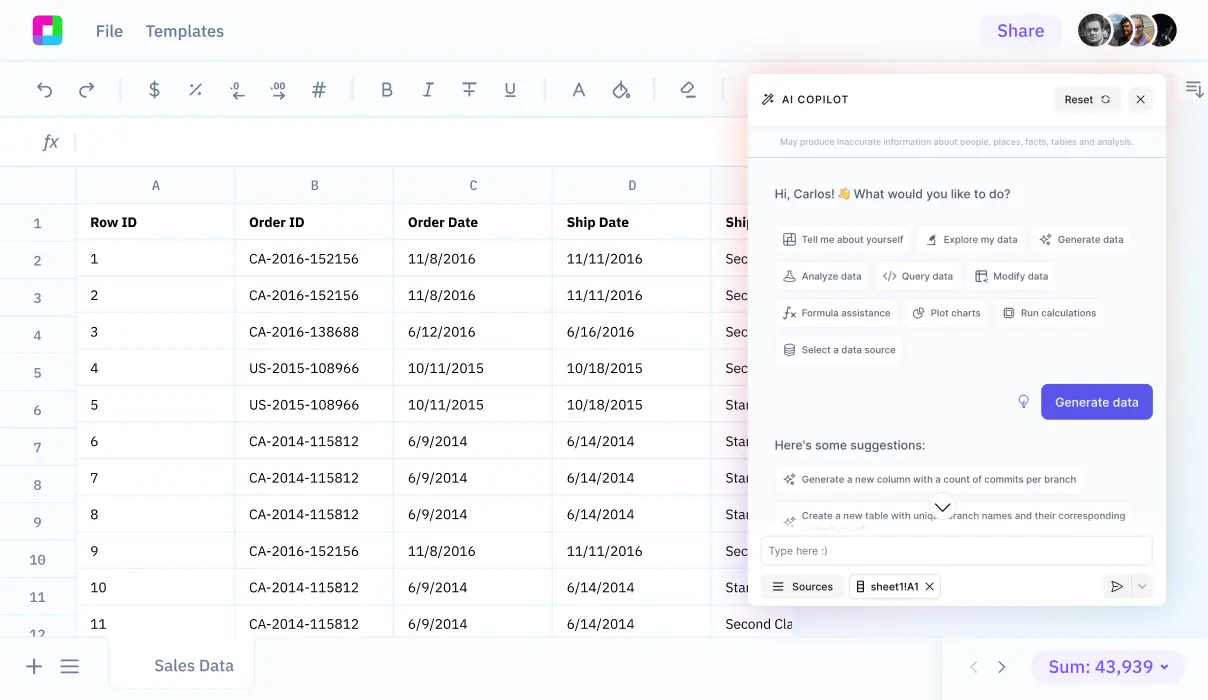

Sourcetable, an AI-powered spreadsheet alternative, eliminates the complexity of statistical analysis. Instead of wrestling with Excel formulas, you simply tell Sourcetable's AI chatbot what analysis you need. Upload your data or connect your database, and Sourcetable's AI will handle the calculations, visualizations, and insights automatically.

Learn how to perform Inter-Rater Reliability Analysis using Sourcetable's AI features at https://app.sourcetable.cloud/signup.

Why Sourcetable Is Best for Inter-Rater Reliability Analysis

Inter-rater reliability analysis is essential for evaluating observational measures in experimental and quasi-experimental designs. While both Sourcetable and Excel can perform this analysis, Sourcetable's AI-powered approach transforms how researchers work with data.

AI-Powered Analysis

Sourcetable eliminates the complexity of traditional spreadsheet functions. Its conversational AI interface lets researchers perform inter-rater reliability analysis by simply describing what they want to analyze, making the process intuitive and efficient.

Streamlined Data Handling

Researchers can upload their reliability data via CSV or Excel files, or connect directly to databases. Sourcetable's AI chatbot then guides users through the analysis process, from data preparation to final results.

Advanced Statistical Capabilities

Sourcetable supports Krippendorff's Alpha, a flexible inter-rater reliability metric that handles incomplete data sets, varying sample sizes, and any number of raters at nominal, ordinal, interval, and ratio measurement levels. Simpler metrics such as Cohen's Kappa, which assumes exactly two raters and complete nominal ratings, lack this flexibility.
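To make the Krippendorff's Alpha calculation concrete, here is a minimal Python sketch for nominal (categorical) data. It is an independent illustration, not Sourcetable's implementation; the function name `krippendorff_alpha_nominal` and the input layout (one list per unit, one entry per rater, `None` for a missing rating) are assumptions. It builds a coincidence matrix from every pair of ratings within a unit, skips units with fewer than two ratings, and returns 1 minus the ratio of observed to expected disagreement.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal data (illustrative sketch).

    `units` is a list of units; each unit is a list with one entry per rater,
    using None for a missing rating (a hypothetical input layout).
    """
    # Coincidence matrix: every ordered pair of ratings from different raters
    # within a unit contributes 1 / (m - 1), where m is that unit's rating count.
    coincidences = Counter()
    for unit in units:
        values = [v for v in unit if v is not None]
        m = len(values)
        if m < 2:
            continue  # units with fewer than two ratings cannot be paired
        for a, b in permutations(values, 2):
            coincidences[(a, b)] += 1 / (m - 1)

    # Marginal totals n_c and the total number of pairable values n.
    totals = Counter()
    for (a, _), weight in coincidences.items():
        totals[a] += weight
    n = sum(totals.values())

    # Nominal metric: any two different values count as full disagreement.
    observed = sum(w for (a, b), w in coincidences.items() if a != b)
    expected = sum(totals[a] * totals[b] for a in totals for b in totals if a != b) / (n - 1)
    if expected == 0:
        raise ValueError("alpha is undefined when all pairable ratings share one value")
    return 1 - observed / expected


# Four raters (columns) and twelve units (rows); None marks a missing rating.
ratings = [
    [1, 1, None, 1], [2, 2, 3, 2], [3, 3, 3, 3], [3, 3, 3, 3],
    [2, 2, 2, 2], [1, 2, 3, 4], [4, 4, 4, 4], [1, 1, 2, 1],
    [2, 2, 2, 2], [None, 5, 5, 5], [None, None, 1, 1], [None, 3, None, None],
]
print(round(krippendorff_alpha_nominal(ratings), 3))  # 0.743
```

The last unit has only one rating, so it is simply left out of the coincidence matrix; that is how alpha tolerates incomplete data without discarding whole raters.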

Excel Comparison

While Excel requires manual function input and deep statistical knowledge, Sourcetable's AI interface makes complex analyses accessible. Both tools can produce the same reliability statistics, but Sourcetable's conversational approach and automated analysis capabilities make it the superior choice for efficient research.

Benefits of Inter-Rater Reliability Analysis with Sourcetable

Inter-rater reliability analysis ensures data consistency and reliability across multiple observers. This analysis is crucial for validating study results and adds credibility to qualitative research findings.

Why Choose Sourcetable for Inter-Rater Reliability Analysis

Sourcetable, an AI-powered spreadsheet platform, transforms how you analyze data through natural language interaction. Simply upload your reliability data files or connect your database, then tell Sourcetable's AI what analysis you need. The platform handles complex calculations and data processing automatically.

The platform's AI capabilities automate repetitive tasks, minimize errors, and improve accuracy in data analysis. Instead of manually working with Excel functions, users can simply describe their analysis needs in plain language, and Sourcetable's AI will execute the appropriate calculations and generate results.

Sourcetable enhances productivity through advanced data visualization features, surpassing Excel's basic charting capabilities. Users can request specific charts and visualizations through natural conversation, making data presentation effortless and more efficient than traditional spreadsheet tools.

Examples of Inter-Rater Reliability Analysis in Sourcetable

Sourcetable, an AI-powered spreadsheet alternative, simplifies inter-rater reliability analysis through natural language commands. Using Sourcetable's AI chatbot, you can analyze agreement between two raters with statistics such as Cohen's Kappa, which ranges from -1 to 1: 1 indicates perfect agreement, 0 indicates agreement no better than chance, and negative values indicate less agreement than chance. This analysis is valuable in medical diagnosis, where two doctors evaluate the same patients for conditions such as depression, personality disorders, or schizophrenia.
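For readers who want to check what a kappa value means, the calculation behind Cohen's Kappa is short: observed agreement p_o is compared with the agreement p_e expected if each rater assigned labels independently at their own base rates. The Python sketch below is illustrative and is not Sourcetable's code; the function name `cohens_kappa` and the sample diagnoses are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same subjects (illustrative)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of subjects")
    n = len(rater_a)
    # Observed agreement: share of subjects given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over labels.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical diagnoses from two doctors for six patients.
doctor_1 = ["depression", "depression", "none", "none", "depression", "none"]
doctor_2 = ["depression", "none", "none", "none", "depression", "depression"]
print(round(cohens_kappa(doctor_1, doctor_2), 3))  # 0.333
```

Here the doctors agree on 4 of 6 patients (p_o of about 0.67), but with each doctor labelling half the patients "depression", 50% agreement is expected by chance alone (p_e = 0.5), so kappa is only about 0.33.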

For continuous data, simply tell Sourcetable to calculate the Intraclass Correlation Coefficient (ICC), which typically ranges from 0 to 1, with higher values indicating stronger agreement. Percentage Agreement is also available through simple AI commands; it reports the proportion of ratings on which raters agree, but it does not correct for agreement that would occur by chance.
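ICC is a family of statistics rather than a single formula, so it matters which form a tool reports. As an illustration only, the sketch below computes one common form, ICC(2,1) from Shrout and Fleiss (two-way random effects, absolute agreement, single rater), from a complete subjects-by-raters table using the usual two-way ANOVA mean squares. The choice of ICC(2,1), the function name `icc_2_1`, and the input layout are assumptions, not necessarily what Sourcetable reports. This estimator can also return a value below 0 when rater error outweighs the differences between subjects, although values between 0 and 1 are the norm.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    `ratings` holds one row per subject and one column per rater, with no gaps.
    """
    n = len(ratings)
    if n < 2:
        raise ValueError("need at least two subjects")
    k = len(ratings[0])
    if k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete table with at least two raters")

    grand_mean = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Sums of squares for subjects (rows), raters (columns), and residual error.
    ss_rows = k * sum((m - grand_mean) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand_mean) ** 2 for m in col_means)
    ss_total = sum((x - grand_mean) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    # Mean squares from the two-way ANOVA table.
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Six subjects scored by four raters on a 1-10 scale.
scores = [
    [9, 2, 5, 8], [6, 1, 3, 2], [8, 4, 6, 8],
    [7, 1, 2, 6], [10, 5, 6, 9], [6, 2, 4, 7],
]
print(round(icc_2_1(scores), 2))  # 0.29
```

The low value reflects systematic differences between raters (the second rater scores every subject lower), which an absolute-agreement ICC penalizes; a consistency-type form such as ICC(3,1) is noticeably higher on the same table.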

Advanced Analysis Methods

By uploading your data or connecting your database to Sourcetable, you can use its AI to run Light's Kappa, Weighted Kappa, and Fleiss' Kappa analyses. Fleiss' Kappa is commonly used for studies with more than two raters, and Sourcetable's AI handles the calculations automatically.
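The two multi-rater statistics named above work differently. Fleiss' Kappa starts from a subjects-by-categories table of counts (how many raters put each subject in each category), while Light's Kappa averages Cohen's Kappa over every pair of raters. The Python sketch below shows both; it is illustrative rather than Sourcetable's implementation, and the function names and input layouts are assumptions. The Fleiss' version assumes every subject was rated by the same number of raters.

```python
from collections import Counter
from itertools import combinations


def fleiss_kappa(counts):
    """Fleiss' kappa from a subjects x categories table of rating counts."""
    n_subjects = len(counts)
    m = sum(counts[0])  # raters per subject (assumed constant)
    if m < 2 or any(sum(row) != m for row in counts):
        raise ValueError("every subject needs the same number (>= 2) of ratings")
    # Per-subject agreement: share of rater pairs that agree on that subject.
    p_i = [(sum(c * c for c in row) - m) / (m * (m - 1)) for row in counts]
    p_bar = sum(p_i) / n_subjects
    # Chance agreement from the pooled category proportions.
    totals = [sum(col) for col in zip(*counts)]
    p_e = sum((t / (n_subjects * m)) ** 2 for t in totals)
    return (p_bar - p_e) / (1 - p_e)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for one pair of raters."""
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


def lights_kappa(raters):
    """Light's kappa: mean Cohen's kappa over all pairs of raters."""
    pairs = list(combinations(raters, 2))
    return sum(cohens_kappa(a, b) for a, b in pairs) / len(pairs)


# Three hypothetical raters labelling four subjects.
raters = [["a", "a", "b", "b"], ["a", "a", "b", "b"], ["a", "b", "b", "b"]]
print(round(lights_kappa(raters), 3))  # 0.667

# The same ratings as counts per subject: columns are categories "a" and "b".
counts = [[3, 0], [2, 1], [0, 3], [0, 3]]
print(round(fleiss_kappa(counts), 3))  # 0.657
```

On identical ratings the two statistics differ slightly because Light's Kappa uses each pair's own marginal proportions for chance agreement, while Fleiss' Kappa pools all raters' category proportions.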

Healthcare Applications

In healthcare settings, these reliability analyses support systematic evaluation of AI model quality and clinical utility. An 80% agreement rate is often cited as the minimum threshold for acceptable reliability, but healthcare studies frequently require stricter standards. These methods help validate scoring rubrics and ensure consistent expert ratings across medical assessments.
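Percentage agreement is the simplest statistic to compare against a threshold such as 80%. A minimal Python sketch, assuming two raters and a hypothetical `threshold` parameter that defaults to 0.80 (a stricter healthcare study would raise it):

```python
def percent_agreement(rater_a, rater_b):
    """Proportion of subjects on which two raters give the same rating."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of subjects")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def meets_threshold(rater_a, rater_b, threshold=0.80):
    """True if percentage agreement reaches the chosen threshold."""
    return percent_agreement(rater_a, rater_b) >= threshold


# Hypothetical rubric scores from two expert reviewers for ten cases.
reviewer_1 = ["pass", "pass", "fail", "pass", "pass", "fail", "pass", "pass", "pass", "pass"]
reviewer_2 = ["pass", "pass", "fail", "pass", "fail", "fail", "pass", "pass", "pass", "fail"]
print(percent_agreement(reviewer_1, reviewer_2))  # 0.8
print(meets_threshold(reviewer_1, reviewer_2))  # True
print(meets_threshold(reviewer_1, reviewer_2, threshold=0.90))  # False
```

Because percentage agreement ignores chance, it is usually reported alongside a kappa-type statistic. Given how often each reviewer says "pass", these two would agree on about 56% of cases by chance alone, so Cohen's Kappa for the same data is only about 0.55 despite the 80% raw agreement.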

Use Cases for Inter-Rater Reliability Analysis with Sourcetable

Clinical Psychology Assessment

Two psychologists classify 50 patients as psychotic, borderline, or normal. Use Sourcetable's AI chatbot to automate kappa calculations and generate visualizations of rater agreement patterns.

Drug Use Research

Compare questionnaire and interview responses from 50 students regarding drug use frequency. Upload data files to Sourcetable and use conversational AI to analyze cross-method reliability.

Medical Diagnosis Validation

Evaluate agreement between two diagnostic methods for child mortality assessment. Sourcetable's AI assistant performs complex reliability calculations and generates comprehensive statistical reports through natural language commands.

Behavioral Analysis

Multiple raters code subject behaviors using categorical variables. Connect your database to Sourcetable and let AI analyze inter-rater reliability across multiple categories and raters automatically.

Literature Review Classification

Multiple reviewers categorize research papers for a systematic review. Sourcetable's AI processes uploaded classification data and generates agreement metrics through simple conversational prompts.

Frequently Asked Questions

What is Inter-Rater Reliability Analysis and why is it important?

Inter-rater reliability is a statistical measure that determines the degree of agreement among independent observers who rate, code, or assess the same phenomenon. It's essential in clinical research, social sciences, and education to ensure assessment tools are valid and research findings maintain integrity through consistent assessments across multiple raters.

How can I perform Inter-Rater Reliability Analysis using Sourcetable?

Sourcetable's AI-powered interface makes it simple to perform Inter-Rater Reliability Analysis. Just upload your data file or connect your database, and tell Sourcetable's AI chatbot what analysis you want to perform. The AI will automatically calculate the appropriate statistics, whether you need Cohen's Kappa, Fleiss' Kappa, or other reliability measures, without requiring complex formulas or manual calculations.

When should I use different Inter-Rater Reliability measures in my analysis?

Use Cohen's Kappa for two raters, Fleiss' Kappa for more than two raters, and Gwet's AC2 or Krippendorff's Alpha when working with ordinal data. Sourcetable's AI can help you determine which measure is most appropriate for your data and automatically perform the calculations when you describe your analysis needs in natural language.
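When only two raters score ordinal categories, the Weighted Kappa mentioned earlier is another common option: it gives partial credit when raters land on neighbouring categories instead of treating every disagreement as total. The sketch below illustrates weighted kappa specifically, not Gwet's AC2 or Krippendorff's Alpha, and it is not Sourcetable's implementation; the function name, the `categories` argument (which fixes the category order), and the linear or quadratic disagreement weights are assumptions.

```python
def weighted_kappa(rater_a, rater_b, categories, weighting="quadratic"):
    """Cohen's weighted kappa for two raters on ordered categories (sketch)."""
    if weighting not in ("linear", "quadratic"):
        raise ValueError("weighting must be 'linear' or 'quadratic'")
    if len(rater_a) != len(rater_b) or not rater_a or len(categories) < 2:
        raise ValueError("need paired ratings and at least two categories")
    index = {c: i for i, c in enumerate(categories)}  # position = ordinal rank
    q, n = len(categories), len(rater_a)

    # Observed joint proportions and each rater's marginal proportions.
    observed = [[0.0] * q for _ in range(q)]
    for a, b in zip(rater_a, rater_b):
        observed[index[a]][index[b]] += 1 / n
    row = [sum(observed[i]) for i in range(q)]
    col = [sum(observed[i][j] for i in range(q)) for j in range(q)]

    def weight(i, j):
        # Disagreement weight: 0 on the diagonal, 1 for the most distant categories.
        d = abs(i - j) / (q - 1)
        return d if weighting == "linear" else d * d

    cells = [(i, j) for i in range(q) for j in range(q)]
    obs_disagreement = sum(weight(i, j) * observed[i][j] for i, j in cells)
    exp_disagreement = sum(weight(i, j) * row[i] * col[j] for i, j in cells)
    if exp_disagreement == 0:
        raise ValueError("weighted kappa is undefined when expected disagreement is 0")
    return 1 - obs_disagreement / exp_disagreement


# Hypothetical severity ratings (mild < moderate < severe) from two raters.
levels = ["mild", "moderate", "severe"]
rater_1 = ["mild", "moderate", "severe", "severe"]
rater_2 = ["mild", "severe", "severe", "moderate"]
print(round(weighted_kappa(rater_1, rater_2, levels), 3))  # 0.636
print(round(weighted_kappa(rater_1, rater_2, levels, "linear"), 3))  # 0.429
```

For comparison, unweighted Cohen's Kappa on the same ratings is 0.2, because it counts the two one-step disagreements (moderate versus severe) as complete misses.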

Conclusion

Inter-rater reliability analysis is crucial for ensuring measurement consistency across multiple raters. While Excel offers tools like Cohen's Kappa and Fleiss' Kappa through add-ons, these methods require manual setup and statistical expertise. For more complex analyses involving Krippendorff's Alpha or Gwet's AC2, the calculations become increasingly difficult in Excel.

Sourcetable offers an AI-powered alternative that eliminates the complexity of inter-rater reliability analysis. Instead of wrestling with Excel functions and formulas, simply tell Sourcetable's AI chatbot what you want to analyze and it will handle the calculations, generate visualizations, and guide you through interpreting the results. Whether you're uploading rating data files or connecting to a database, Sourcetable makes inter-rater reliability analysis accessible to everyone. Try Sourcetable's AI-powered analysis tools at https://app.sourcetable.cloud/signup.